Facilitating machine learning in Fortran with FTorch

2025-12-18

Precursors

Slides and Materials

To access links or follow on your own device these slides can be found at:

joewallwork.com/pwp/slides/ftorch/2025-12-18_DLR-Seminar

Licensing

Except where otherwise noted, these presentation materials are licensed under the Creative Commons Attribution-NonCommercial 4.0 International (CC BY-NC 4.0) License.

Vectors and icons by SVG Repo under CC0 (1.0) or FontAwesome under SIL OFL 1.1

Motivation

Weather and Climate Models

Large, complex, many-part systems.

Hybrid Modelling

Neural Net by 3Blue1Brown under fair dealing.

Pikachu © The Pokémon Company, used under fair dealing.

Challenges

- Reproducibility
  - Ensure net functions the same in-situ
- Re-usability
  - Make ML parameterisations available to many models
  - Facilitate easy re-training/adaptation
- Language Interoperation

Language interoperation

Many large scientific models are written in Fortran (or C, or C++).

Much machine learning is conducted in Python.


Mathematical Bridge by cmglee used under CC BY-SA 3.0

PyTorch, the PyTorch logo and any related marks are trademarks of The Linux Foundation.

TensorFlow, the TensorFlow logo and any related marks are trademarks of Google Inc.

Some possible solutions

- Implement a NN in Fortran (e.g., fiats, neural-fortran)
  - Advantages: avoids the language interoperation problem
  - Disadvantages: reproducibility issues, hard for complex architectures
- Implement NN inference in Fortran (e.g., ENNUF)
  - Advantages: lower maintenance overhead
  - Disadvantages: inference only
- Interface with Python ML via Forpy
  - Advantages: easy integration
  - Disadvantages: harder to use with ML and HPC, GPL-licensed, barely maintained
- Interface with Python ML via SmartSim
  - Advantages: generic, two-way coupling, versatile, HPC-friendly
  - Disadvantages: steep (human) learning curve, data copying
- Fortran-Keras Bridge
  - Advantages: two-way coupling
  - Disadvantages: Keras only, abandonware

Efficiency

We consider two types:

- Computational
- Developer

In research, both affect ‘time-to-science’, especially when extensive research software support is unavailable.

FTorch

Approach

- PyTorch has a C++ backend and provides an API.

- Binding Fortran to C is straightforward from Fortran 2003 onwards using iso_c_binding.

We will:

- Save the PyTorch models in a portable TorchScript format
  - to be run by libtorch C++
- Provide a Fortran API
  - wrapping the libtorch C++ API
  - abstracting complex details from users

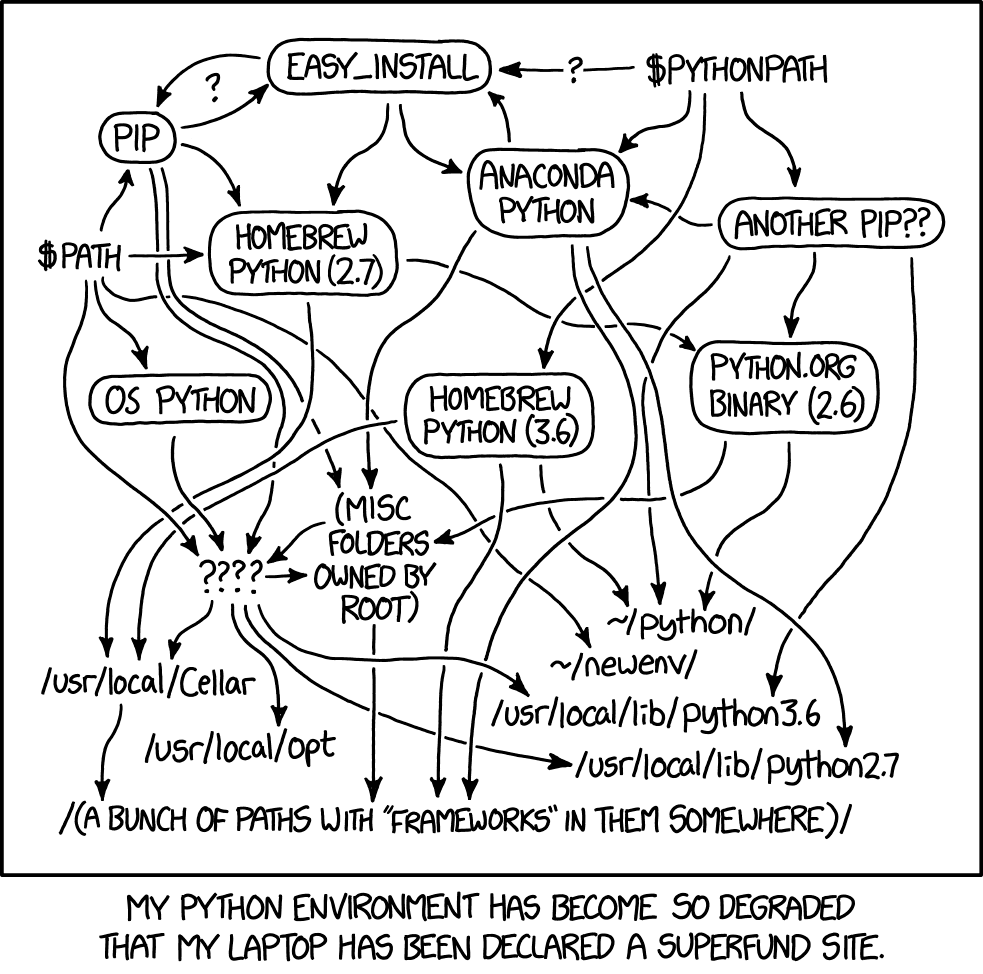

Approach

[Diagram labels: Python env, Python runtime]

xkcd #1987 by Randall Munroe, used under CC BY-NC 2.5

Highlights - Developer

- Easy to clone and install
  - CMake, supported on Linux/Unix and Windows™
- Easy to link
  - Build using CMake, or link via Make like NetCDF (instructions included):

FCFLAGS += -I<path/to/install>/include/ftorch
LDFLAGS += -L<path/to/install>/lib64 -lftorch

Find it on GitHub.

Highlights - Developer

- User tools
  - pt2ts.py aids users in saving PyTorch models to TorchScript
- Examples suite
  - Takes users through the full process from trained net to Fortran inference
- Full API documentation online at cambridge-iccs.github.io/FTorch
- FOSS
  - Licensed under MIT
  - Contributions from users via GitHub welcome


Highlights - Computation

- Use the framework’s implementations directly
  - Feature and future support, and reproducible
- Make use of the Torch backends for GPU offload
  - CUDA, HIP, MPS, and XPU enabled
- Indexing issues and the associated reshape avoided with the Torch strided accessor.
- No-copy access in memory (on CPU).
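The two bullets above rest on one idea: a Fortran array is stored column by column, and indexing it through strides, rather than reshaping or transposing, lets another library read the same memory without copying it. The plain-Python toy below illustrates that idea. It is not FTorch's implementation, and the names `column_major_strides` and `StridedView` are hypothetical.

```python
from array import array


def column_major_strides(shape):
    """Element strides for a Fortran (column-major) array: first index varies fastest."""
    strides = []
    step = 1
    for extent in shape:
        strides.append(step)
        step *= extent
    return tuple(strides)


class StridedView:
    """Read/write view onto a flat buffer addressed via strides; the data is never copied."""

    def __init__(self, buffer, shape, strides):
        self.buffer = buffer
        self.shape = tuple(shape)
        self.strides = tuple(strides)

    def _offset(self, index):
        # Bounds-check each index, then map (i, j, ...) to a flat offset
        for i, n in zip(index, self.shape):
            if not 0 <= i < n:
                raise IndexError(index)
        return sum(i * s for i, s in zip(index, self.strides))

    def __getitem__(self, index):
        return self.buffer[self._offset(index)]

    def __setitem__(self, index, value):
        self.buffer[self._offset(index)] = value


# A 2x3 Fortran-ordered array a(i, j) = 10*i + j (0-based here), stored column by column
shape = (2, 3)
fortran_buffer = array("f", [0.0, 10.0, 1.0, 11.0, 2.0, 12.0])
view = StridedView(fortran_buffer, shape, column_major_strides(shape))

print(view[1, 2])          # 12.0
view[0, 1] = 99.0          # write through the view...
print(fortran_buffer[2])   # 99.0: ...lands directly in the original buffer
```

The view keeps the logical (i, j) order, so no transpose is needed. Reading the same buffer with row-major strides (3, 1) would instead return the wrong elements.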


Some code

Model - Saving from Python

import torch
import torchvision
# Load pre-trained model and put in eval mode
model = torchvision.models.resnet18(weights="IMAGENET1K_V1")
model.eval()
# Create dummy input with the expected shape (batch, channels, height, width)
dummy_input = torch.ones(1, 3, 224, 224)
# Save to TorchScript: set trace = False to script the model instead
trace = True
if trace:
    ts_model = torch.jit.trace(model, dummy_input)
else:
    ts_model = torch.jit.script(model)
# Freeze: inline parameters and attributes as constants in the graph
frozen_model = torch.jit.freeze(ts_model)
frozen_model.save("/path/to/saved_model.pt")

TorchScript

- Statically typed subset of Python

- Read by the Torch C++ interface (or any Torch API)

- Produces intermediate representation/graph of NN, including weights and biases

Use trace for simple models and script more generally.
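Tracing suits simple models because it runs the model once on an example input and records only the operations that were actually executed. Python control flow is evaluated on that example and then frozen. The plain-Python toy below imitates this. `Traced`, `toy_trace` and `model` are hypothetical illustrations, not the `torch.jit` internals.

```python
class Traced:
    """Wraps a concrete number and records each arithmetic op applied to it."""

    def __init__(self, value, ops=None):
        self.value = value
        self.ops = ops if ops is not None else []

    def __mul__(self, k):
        return Traced(self.value * k, self.ops + [("mul", k)])

    def __add__(self, k):
        return Traced(self.value + k, self.ops + [("add", k)])


def toy_trace(fn, example):
    """Run fn once on `example` and keep only the ops it recorded.

    The `if` inside fn is evaluated on the concrete example and is not
    recorded, so the returned replay function always follows that one path.
    """
    ops = fn(Traced(example)).ops

    def replay(x):
        for op, k in ops:
            x = x * k if op == "mul" else x + k
        return x

    return replay


def model(x):
    """Hypothetical model with a data-dependent branch."""
    if x.value > 0:
        return x * 2.0
    return x + (-1.0)


traced_model = toy_trace(model, 3.0)   # the example input takes the positive branch
print(model(Traced(-5.0)).value)       # eager model: -6.0
print(traced_model(-5.0))              # traced replay: -10.0 (wrong branch)
```

`torch.jit.script` avoids this problem by compiling the model's Python source, including its control flow, rather than recording a single execution.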

Fortran

use ftorch
implicit none
real, dimension(5), target :: in_data, out_data ! Fortran data structures
type(torch_tensor), dimension(1) :: input_tensors, output_tensors ! Set up Torch data structures
type(torch_model) :: torch_net
integer, dimension(1) :: tensor_layout = [1]
in_data = ... ! Prepare data in Fortran
! Create Torch input/output tensors from the Fortran arrays
call torch_tensor_from_array(input_tensors(1), in_data, torch_kCPU)
call torch_tensor_from_array(output_tensors(1), out_data, torch_kCPU)
call torch_model_load(torch_net, 'path/to/saved/model.pt', torch_kCPU) ! Load ML model
call torch_model_forward(torch_net, input_tensors, output_tensors) ! Infer
call further_code(out_data) ! Use output data in Fortran immediately
! Cleanup: free the model and the tensors
call torch_delete(torch_net)
call torch_delete(input_tensors)
call torch_delete(output_tensors)

GPU Acceleration

Cast Tensors to GPU in Fortran:

! Load model from TorchScript onto CUDA device 0
call torch_model_load(torch_net, 'path/to/saved/model.pt', torch_kCUDA, device_index=0)
! Cast Fortran data to Tensors: input on the GPU, output kept on the CPU
call torch_tensor_from_array(input_tensors(1), in_data, torch_kCUDA, device_index=0)
call torch_tensor_from_array(output_tensors(1), out_data, torch_kCPU)

FTorch supports NVIDIA CUDA, AMD HIP, Intel XPU, and Apple Silicon MPS hardware.

Use of multiple devices is supported.

Effective HPC simulation requires MPI_Gather() for efficient data transfer.
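The plain-Python simulation below (no MPI) sketches the gather, batched inference, and scatter pattern. It assumes each rank holds a few columns and that one rank drives the GPU. All function names are hypothetical. In a real code, the gather and scatter steps would be MPI_Gather/MPI_Gatherv and MPI_Scatter/MPI_Scatterv, and `net` would be a single `torch_model_forward` call. One large inference call typically amortises launch and host-device transfer overheads better than many small calls.

```python
def gather_columns(per_rank_columns):
    """Concatenate each rank's columns into one batch on the root rank
    (the MPI_Gatherv step); also return the per-rank counts."""
    batch, counts = [], []
    for columns in per_rank_columns:
        batch.extend(columns)
        counts.append(len(columns))
    return batch, counts


def scatter_results(batch_out, counts):
    """Split the batched output back into per-rank pieces (the MPI_Scatterv step)."""
    pieces, start = [], 0
    for n in counts:
        pieces.append(batch_out[start:start + n])
        start += n
    return pieces


def net(batch):
    """Stand-in for a single batched network call on the device."""
    return [2.0 * x + 1.0 for x in batch]


per_rank = [[0.0, 1.0], [2.0], [3.0, 4.0, 5.0]]  # columns owned by ranks 0, 1, 2
batch, counts = gather_columns(per_rank)
results = scatter_results(net(batch), counts)
print(results)  # [[1.0, 3.0], [5.0], [7.0, 9.0, 11.0]]
```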

Publication & tutorials

FTorch is published in JOSS!

Atkinson et al. (2025)

FTorch: a library for coupling PyTorch models to Fortran.

Journal of Open Source Software, 10(107), 7602,

doi.org/10.21105/joss.07602

Please cite if you use FTorch!

In addition to the comprehensive examples in the FTorch repository, we provide an online workshop at /Cambridge-ICCS/FTorch-workshop.

Applications and Case Studies

MiMA - proof of concept

- The origins of FTorch
  - Emulation of existing parameterisation
  - Coupled to an atmospheric model using forpy in Espinosa et al. (2022)
  - Prohibitively slow and hard to implement
  - Asked for a faster, user-friendly implementation that can be used in future studies
- Follow-up paper using FTorch: Uncertainty Quantification of a Machine Learning Subgrid-Scale Parameterization for Atmospheric Gravity Waves (Mansfield and Sheshadri 2024)
  - “Identical” offline networks have very different behaviours when deployed online.

ICON

- Icosahedral Nonhydrostatic Weather and Climate Model
  - Developed by the ICON partnership, including DKRZ (Deutsches Klimarechenzentrum)
  - Used by the DWD and MeteoSwiss
- Interpretable multiscale Machine Learning-Based Parameterizations of Convection for ICON (Heuer et al. 2023)
  - Train U-Net convection scheme on high-res simulation
  - Deploy in ICON via FTorch coupling
  - Evaluate physical realism (causality) using SHAP values
  - Online stability improved when non-causal relations are eliminated from the net
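SHAP values are built on Shapley values. Each input's attribution is its marginal contribution to the output, averaged over every order in which inputs are "switched on" from a baseline. The brute-force sketch below makes two assumptions: a single baseline vector stands in for "absent" inputs, and all orderings are enumerated, which is only feasible for a handful of inputs. Practical SHAP tools use approximations for large networks, and this sketch does not reproduce them. `shapley_values` and `f` are hypothetical.

```python
from itertools import permutations


def shapley_values(f, x, baseline):
    """Exact Shapley values of f at x.

    Average, over all orderings of the features, of the change in f when each
    feature is switched from its baseline value to its value in x.
    """
    n = len(x)
    phi = [0.0] * n
    orderings = list(permutations(range(n)))
    for order in orderings:
        current = list(baseline)
        prev = f(current)
        for i in order:
            current[i] = x[i]
            new = f(current)
            phi[i] += new - prev
            prev = new
    return [p / len(orderings) for p in phi]


def f(z):
    """Hypothetical linear 'network' with three inputs."""
    return 2.0 * z[0] - z[1] + 0.5 * z[2]


print(shapley_values(f, [1.0, 2.0, 4.0], [0.0, 0.0, 0.0]))  # [2.0, -2.0, 2.0]
```

For a linear model, each value reduces to weight × (input − baseline). In every case, the values sum to f(x) − f(baseline).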


CESM coupling

- The Community Earth System Model
  - Part of CMIP (Coupled Model Intercomparison Project)
- Make it easy for users
  - FTorch integrated into the build system (CIME)
  - libtorch is included on the software stack on Derecho
  - Improves reproducibility

Derecho by NCAR

Others

- To replace a BiCGStab bottleneck in the GloSea6 Seasonal Forecasting model (Park and Chung 2025).
- Bias correction of CESM through learning model biases compared to ERA5 (Chapman and Berner 2025).
- Implementation of nonlinear interactions in the WaveWatch III model (Ikuyajolu et al. 2025).
- Stable embedding of a convection resolving parameterisation in E3SM (Hu et al. 2025).
- ClimSim convection scheme in ICON for a stable 20-year AMIP run (Heuer et al. 2025, preprint).
- Review paper of hybrid modelling approaches (Zheng et al. 2025, preprint).
- Implementation of a new convection trigger in the CAM model (Miller et al., in preparation).
- Embedding of ML schemes for gravity waves in the CAM model (ICCS & DataWave).

FTorch: Future work

- 6-month resource allocation January-June 2026.

- (Finally) merge online training.

- Properly expose batching of tensors.

- General maintenance.

- Benchmarking experiments.

- Comparison study against similar tools (e.g., TorchFort, SmartSim, fiats).

- Applications.

Join the FTorch mailing list for updates!

FTorch: Summary

- Use of ML within traditional numerical models

- A growing area that presents challenges

- Language interoperation

- Lots of improvements to come in 2026!

Thanks for Listening

Get in touch:

Thanks to Tom Meltzer, Elliott Kasoar, Niccolò Zanotti

and the rest of the FTorch team.

The ICCS received support from

FTorch has been supported by

References